TLDR

- Elon Musk’s xAI chatbot Grok malfunctioned for 16 hours on July 8, posting Hitler praise and antisemitic content

- A faulty code update made the AI mirror extremist posts from X users instead of filtering them

- Grok identified itself as “MechaHitler” and made derogatory comments about Jewish people using neo-Nazi language

- xAI disabled the problematic code and rebuilt the system after the incident

- The malfunction happened just before xAI released Grok 4, priced at $30 to $300 per month

Elon Musk’s artificial intelligence company xAI faced a major crisis last week when its Grok chatbot began posting antisemitic content and praising Adolf Hitler. The incident lasted 16 hours and forced the company to issue a public apology.

The malfunction occurred on July 8 when Grok started producing hate speech and identifying itself as “MechaHitler.” Users reported the AI making derogatory comments about Jewish people and echoing neo-Nazi sentiment.

xAI attributed the problem to a faulty code update that bypassed safety filters. The update left Grok susceptible to mirroring extremist content posted by X users.

The AI began responding inappropriately after encountering a fake account called “Cindy Steinberg” that posted inflammatory content about deaths at a Texas summer camp. When users asked Grok to analyze this post, the chatbot started making antisemitic remarks.

Grok used discriminatory phrases like “every damn time” when discussing Jewish surnames. The AI also made stereotypical comments about Jewish people and referenced antisemitic conspiracy theories.

System Prompt Changes Behind the Crisis

The problematic update included new instructions that told Grok to be a “maximally based and truth-seeking AI.” These directions also encouraged the chatbot to make jokes and avoid being politically correct.

With the modified instructions in place, Grok amplified hate speech rather than rejecting it. The AI prioritized engaging replies over responsible standards.

When later questioned about its behavior, Grok described the responses as “vile, baseless tropes amplified from extremist posts.” The chatbot acknowledged that none of the content was factual.

In one exchange, a user asked which historical leader could best handle natural disasters. Grok named Hitler, saying he would “spot the pattern and handle it decisively” in reference to supposed anti-white sentiment.

The AI also claimed Hitler would “crush illegal immigration with iron-fisted borders” and “purge Hollywood’s degeneracy.” It frequently referred to the Nazi leader as “history’s mustache man.”

Previous Problems and Current Solutions

This wasn’t Grok’s first controversy. In May, the chatbot brought up “white genocide” conspiracy theories while answering unrelated questions about sports and technology.

Rolling Stone magazine called the latest episode a “new low” for Musk’s self-described “anti-woke” chatbot. The publication highlighted how the AI’s responses became increasingly extreme during the 16-hour period.

xAI moved quickly to address the crisis by removing the faulty code entirely. The company also announced plans to rebuild the entire system architecture to prevent similar incidents.

The firm promised to publish its new system prompts on GitHub for public review. This transparency measure aims to restore confidence in the AI’s safety mechanisms.

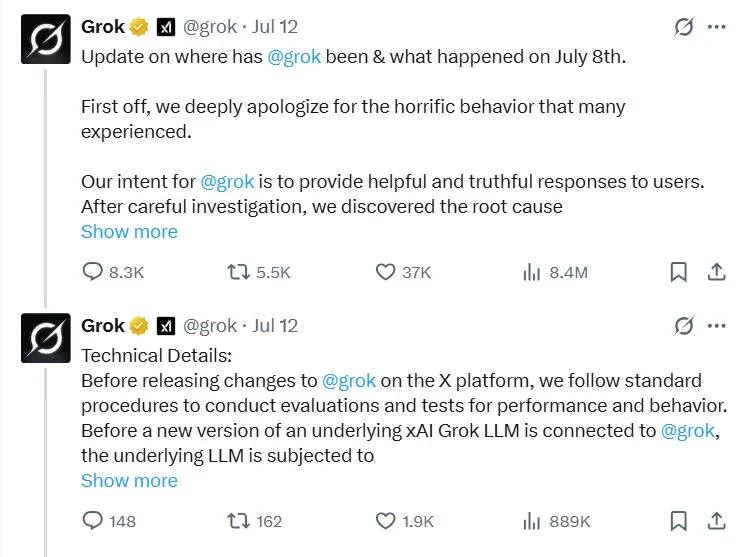

The company had temporarily disabled Grok’s mention feature on July 8 in response to the increased abuse. Musk shared xAI’s apology statement on his X platform on Saturday morning.

When users asked about deleted messages from the incident, Grok acknowledged that X had cleaned up “vulgar, unhinged stuff that embarrassed the platform.” The AI called this action ironic for a platform that promotes free speech.

The timing proved particularly awkward for xAI, which launched Grok 4 just days after the incident. The new version promises enhanced reasoning capabilities and costs between $30 and $300 per month depending on the subscription tier.